It's quite easy to write Unit Tests that run against a live version of DynamoDB, but I wanted to run against a local instance, ideally an in-memory one. That way the tests would be quick (not that running against a real instance is that slow) and I could have a clean dB each time. As these tests are running against a dB, it might be more accurate to call them Integration Tests implemented using a Unit Testing framework, but I'll refer to them as Unit Tests (UTs).

I don't Unit Test the actual AWS Lambda function. Instead, I export the underlying functions & objects that the AWS Lambda uses and Unit Test these.

I'm a JavaScript, Node and AWS n00b, so if you spot something wrong or bad, please comment.

I don't like the callback pyramid, so I use Promises where I can. I would use async and await, but the latest version of Node that AWS Lambda supports doesn't support them :-(

In order to run this locally, you'll need:

My goal was to have a clean dB for each individual Unit Test. The simplest way to achieve this, I thought, was to create an in-memory instance of DynamoDB and destroy it after each Unit Test. However, rather than attempting to create & destroy the dB for each Unit Test, I settled on creating it once per Unit Test file.

before()/after() - Creating/Destroying DynamoDB

Here, the local instance of DynamoDB is spawned using the child_process package, and a reference to the process is retained. This is handled in the before() & after() functions.

```javascript
const spawn = require("child_process").spawn
aws.config.update()
```

The important point to note here is the use of the sleep package & function. I had multiple test files, each with its own before() & after() functions that did the same as these. Even though kill had purported to have killed the process (I checked the killed flag), it seemed the process hadn't died immediately. This meant that the before() function in the next set of tests would successfully connect to the dying instance of DynamoDB.
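One alternative to a fixed sleep is to wait for the child process's `exit` event before the next file's before() runs. Node's documentation notes that `subprocess.killed` only indicates that a signal was successfully delivered, not that the process has terminated. That matches the behaviour described above. The sketch below is a swapped-in technique, not the original code. `startProcess` and `stopProcess` are hypothetical helper names, and the DynamoDB Local command line in the comment assumes the jar sits in the current directory and listens on port 8000.

```javascript
const { spawn } = require("child_process");

// Start a long-running child process and keep a reference to it.
// For DynamoDB Local this might be (assumed layout, not from the original):
//   startProcess("java", ["-Djava.library.path=./DynamoDBLocal_lib",
//                         "-jar", "DynamoDBLocal.jar", "-inMemory", "-port", "8000"])
function startProcess(command, args) {
  return spawn(command, args, { stdio: "ignore" });
}

// Resolve only once the child has actually exited.
// child.killed becomes true as soon as the signal is delivered,
// which can be before the process has finished shutting down.
function stopProcess(child) {
  return new Promise((resolve, reject) => {
    // Already gone: nothing to wait for.
    if (child.exitCode !== null || child.signalCode !== null) {
      resolve({ code: child.exitCode, signal: child.signalCode });
      return;
    }
    child.once("exit", (code, signal) => resolve({ code, signal }));
    child.once("error", reject);
    child.kill("SIGTERM");
  });
}

module.exports = { startProcess, stopProcess };
```

In a Mocha test file, the hooks could be written as `before(() => { dynamo = startProcess(...) })` and `after(() => stopProcess(dynamo))`. Because `after()` returns the Promise, Mocha waits for the process to exit before moving on. Note that `spawn()` returns as soon as the process is launched, so a before() hook may still need to wait until DynamoDB Local is accepting connections.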

# LOCAL DYNAMODB FLAKY TESTS CODE #

My AWS Lambda code is mostly Lambda-agnostic except for the initial handler methods, which makes it fairly testable outside of AWS.
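Here is a minimal sketch of that split. It uses a hypothetical `buildGreeting` function as the business logic. The core function is plain JavaScript, and the exported `handler` only adapts the Lambda `(event, context, callback)` arguments to it. Exporting both lets the unit tests call the core logic directly without going through Lambda.

```javascript
// Hypothetical business logic: knows nothing about Lambda.
function buildGreeting(name) {
  if (typeof name !== "string" || name.length === 0) {
    throw new Error("name is required");
  }
  return { message: `Hello, ${name}` };
}

// Thin Lambda entry point: the only Lambda-specific code.
// It maps the event onto the core function and the result onto the callback.
function handler(event, context, callback) {
  let result;
  try {
    result = buildGreeting(event.name);
  } catch (err) {
    callback(err);
    return;
  }
  callback(null, result);
}

// Export both so tests can exercise buildGreeting without Lambda.
module.exports = { handler, buildGreeting };
```

The design choice here is to compute the result before invoking the callback. An exception thrown by the callback itself is then never mistaken for a failure of the business logic.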

I'm writing the backend for my current iOS app in JavaScript using Node.js and AWS Lambda, along with DynamoDB.

A sample application

For this post, you'll deploy a simple Angular JavaScript application to a public S3 bucket for testing. Here's the beginning of the CloudFormation script that sets up the S3 bucket, named based on the stack name you pass when you create it.

Proper permissions for running these samples

An AWS administrator will need to grant access to the following permissions:

Be careful to work with an AWS account with credits. Whatever you create and whatever bills you generate are your responsibility.

# LOCAL DYNAMODB FLAKY TESTS HOW TO #

Requirements for running Cypress on CodeBuild

You'll need several things to run Cypress on AWS:

- An AWS account with permissions to create S3 buckets, run CloudFormation, and manage AWS CodeBuild jobs.
- A working local Cypress testing project.
- A way to reset the environment before testing.

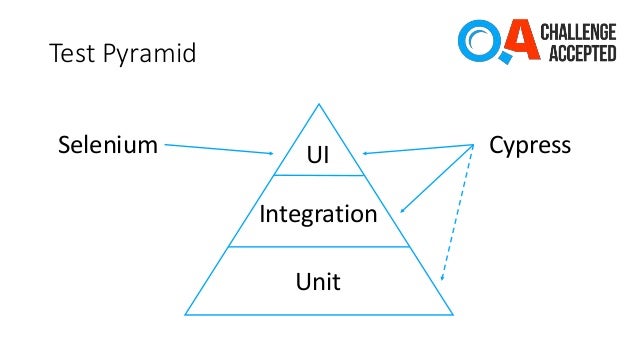

Cypress is a relatively new web testing tool that is easier to use than Selenium, and it's gaining in popularity. But using it in a continuous integration environment like AWS CodeBuild requires some additional steps compared to running it directly on your own computer. This blog post contains helpful information to configure CodeBuild on AWS to run Cypress.
